Evaluating Eventbrite's Navigation Experience

Eventbrite • UX Research

Overview

Eventbrite is a global self-service ticketing platform for live experiences that allows anyone to create, share, and attend events that fuel their passions and enrich their lives.

I joined Eventbrite in July 2019 as a Design Research intern and became a full-time researcher in February 2020. Since then, I've played a key role in supporting the growth of our UXR team and the development of our internal research processes. I have led mixed-methods research through multiple stages of the product development lifecycle on both the event creator and consumer sides of Eventbrite's products.

This project details the mixed-methods study I led on the navigation and information architecture (IA) of Eventbrite's core event management product, which creators use to host events on our platform. This research laid the foundation for our New Navigation redesign, which rolled out in Q1 2022.

Question

"How does the current information architecture of Event Manage support event creators in feature discoverability?"

Outcome

- Led an evaluative study of the information architecture of the event-level navigation ("Left Nav") to identify current pain points and opportunity areas

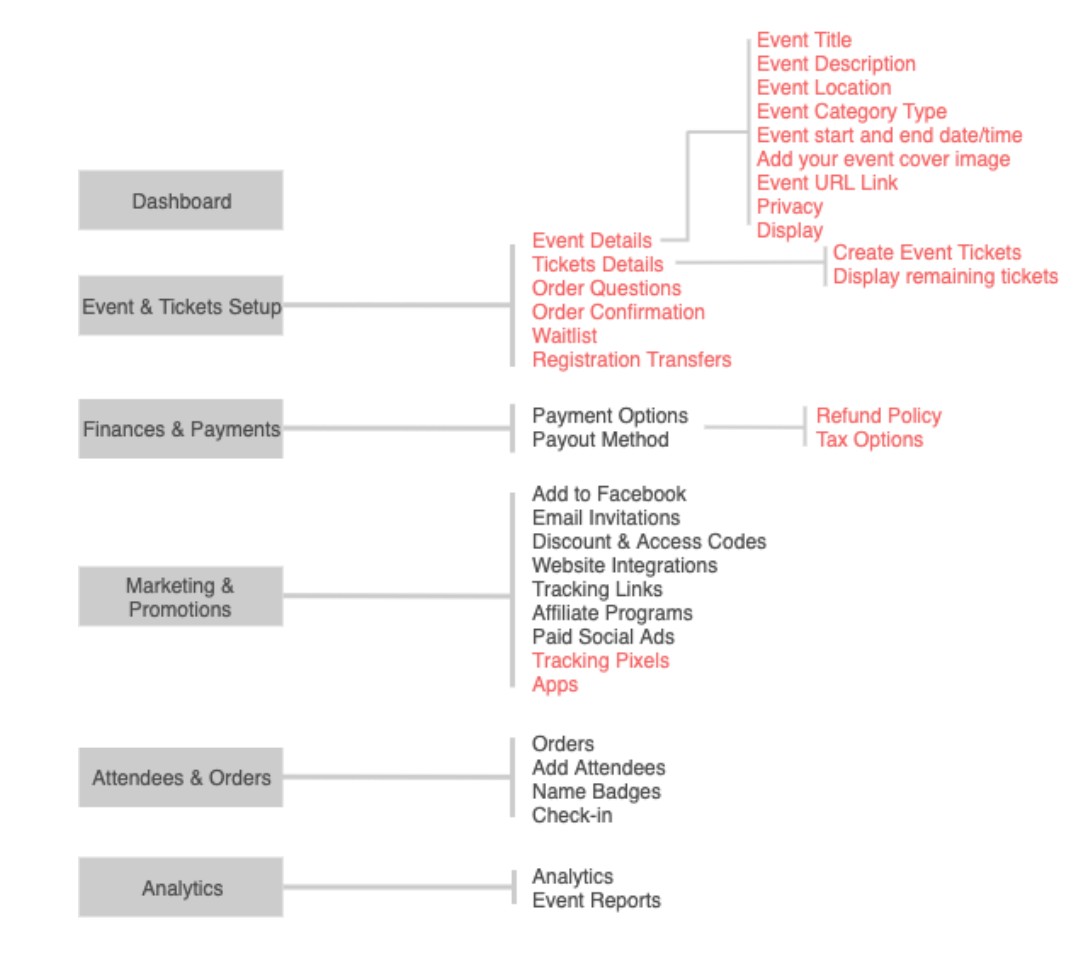

- Developed a new information architecture informed by research insights and proposed it to product and design leadership

- Advocated to product partners for research as an integral part of the product development lifecycle

Methods

Heuristic Evaluation

Competitive Analysis

5 tree-testing studies (competitive tree testing)

2 open card sorts

Internal Interview with CX Rep

Impact

I delivered a report on baseline metrics and key challenges that laid the foundation for improvements, along with an improved version of the IA with measurable gains in task success that product teams have built on. My work led product leadership to create a new squad fully dedicated to improving the overall information architecture and navigation experience of our core product, and it kickstarted a new redesign initiative at the start of Q1 2020. This team recently rolled out our new navigation experience in Q1 2022.

Process

The main research objective was to evaluate the information architecture of the event-level navigation (Left Nav) to identify current pain points and opportunities to improve feature discoverability. I broke the research down into three phases:

Phase 1: Understand the Competitive Landscape

To understand how our navigation experience compared to competitors and industry standards, and where it might fall short, I conducted a competitive analysis of five major competitors, researching their product information architecture and navigation experiences.

As part of the competitive analysis, I also ran a heuristic evaluation of our Left Nav IA and the event creation environment against industry-wide UX heuristics, then cross-compared my findings with an evaluation of competitors' navigation. The result was a report on key areas where our navigation failed to meet certain heuristics and industry standards, along with a comparison against our competitors.

Phase 2: Understand User Mental Models

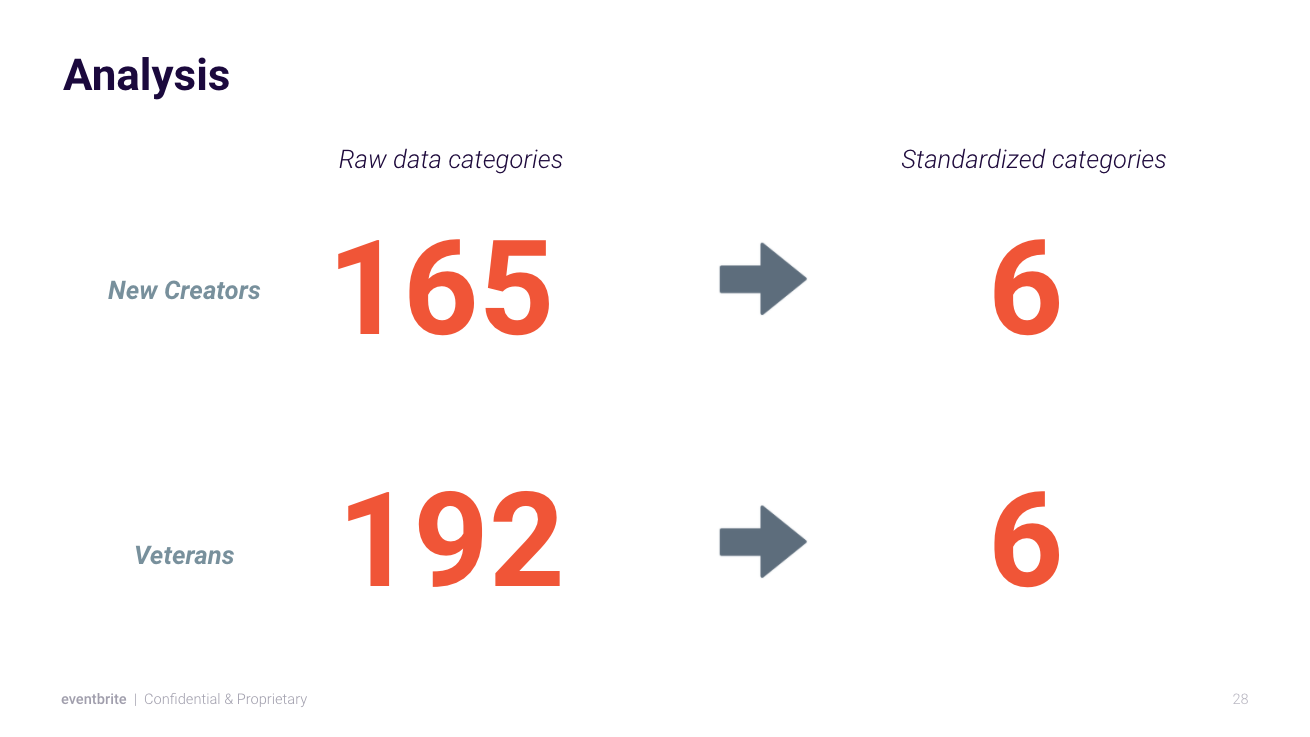

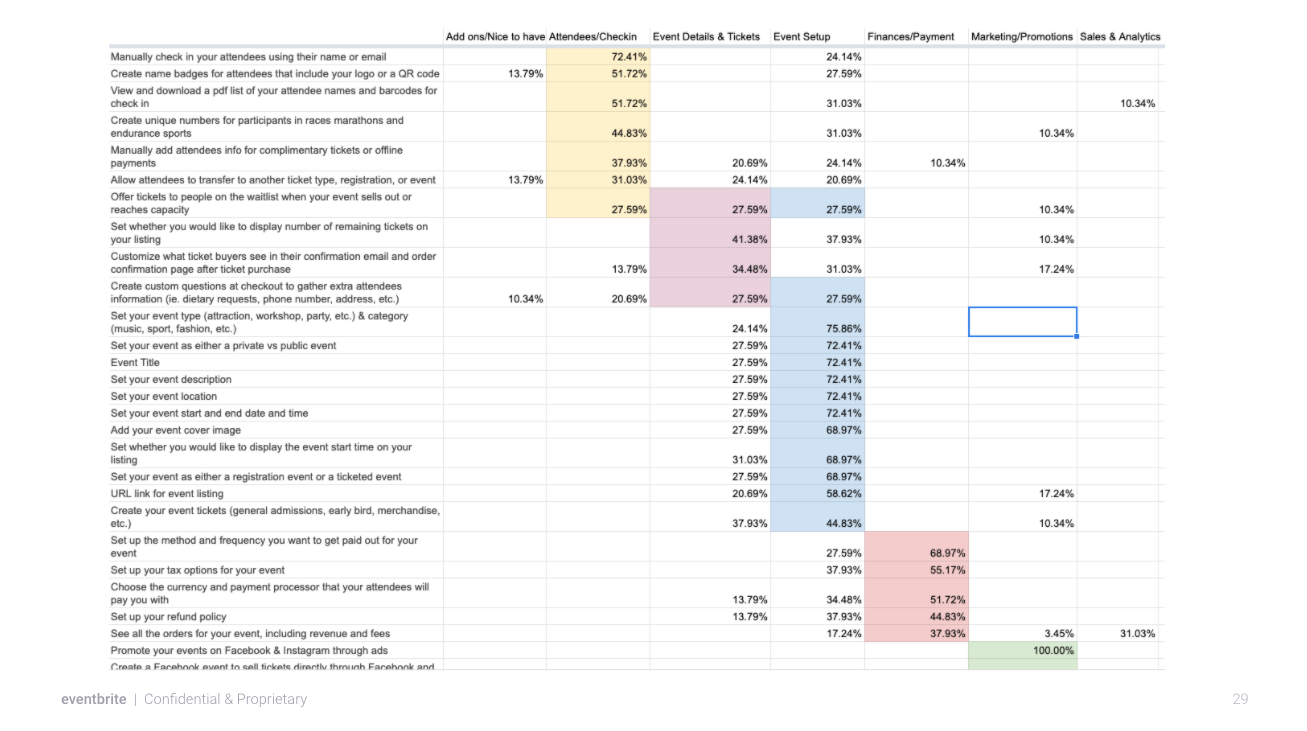

To understand how event creators think about the groupings and nomenclature of our product, I ran two separate open card sorts, each with a different user segment.

The first group consisted of creators with at least a year of experience hosting events who had never used Eventbrite before. This group represents creators coming onto our platform for the first time with the potential to become returning users. The second group consisted of "Eventbrite veterans" with more than two years of experience hosting on our platform, representing long-term creators who stay on Eventbrite.

Comparing these two groups allowed me to identify any significant differences between the mental models of creators with no exposure to our product and those already familiar with it.

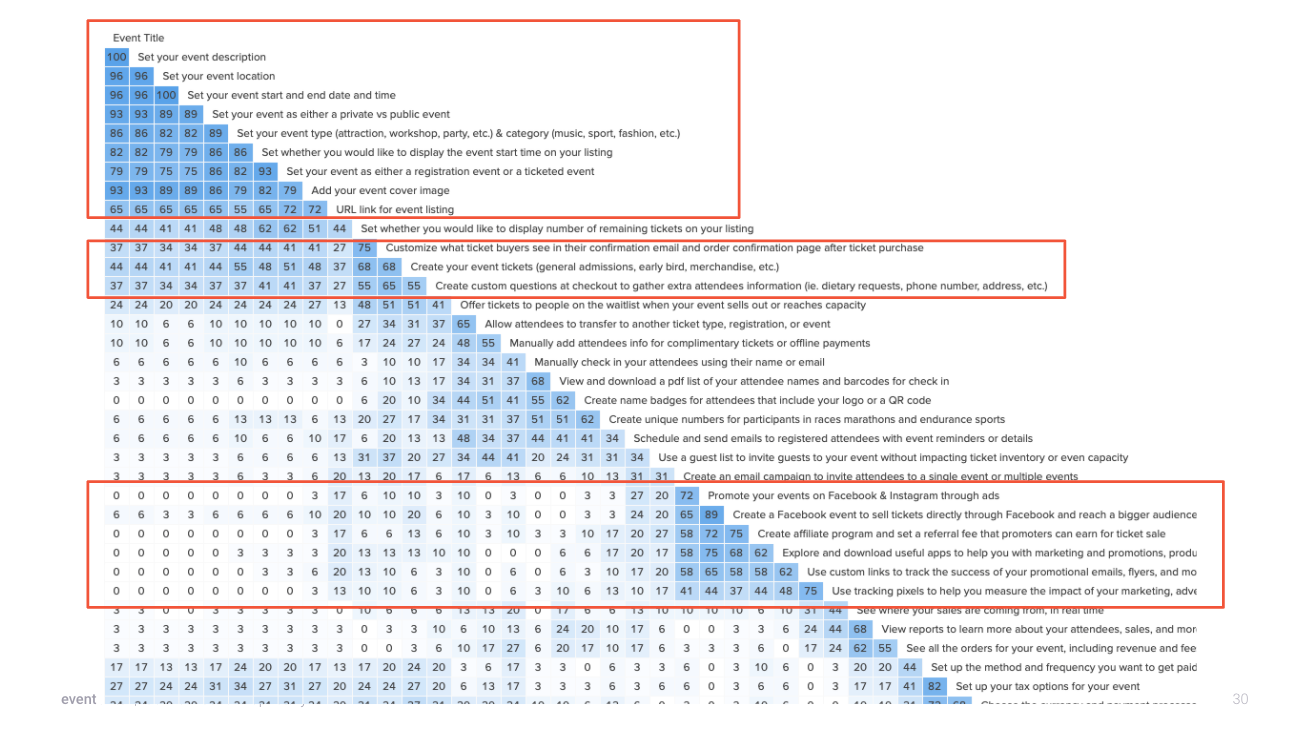

For the analysis, I used a common data analysis technique called Topic Normalization to merge similar participant-created categories based on terminology comparison and content analysis. This reduced the raw data from 150+ participant-created categories to 6 standardized categories for each card sort group. The analysis showed that the two user groups shared similar mental models; however, the groupings and nomenclature in these mental models differed from the Left Nav information architecture.

Left Nav IA (left side) vs. creator mental model (right side)
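As a rough illustration of the terminology side of the normalization described above, the sketch below cleans each participant-created label (case, punctuation, whitespace) and maps it to a standardized category through a hand-built synonym table. The `SYNONYMS` table, the category names, and the sample labels are hypothetical, not data from this study, and the content-analysis step (merging categories because they hold similar cards) is not modeled here.

```python
import re
from collections import Counter

# Hypothetical synonym table: cleaned participant label -> standardized category.
# In practice this mapping is built by hand from terminology comparison and
# content analysis of the cards each participant-created category contains.
SYNONYMS = {
    "ticketing": "Tickets",
    "tickets": "Tickets",
    "ticket types": "Tickets",
    "marketing": "Promotion",
    "promote": "Promotion",
    "promotion": "Promotion",
    "reports": "Reporting",
    "analytics": "Reporting",
    "sales data": "Reporting",
}


def clean_label(label: str) -> str:
    """Lowercase a label, replace punctuation with spaces, and collapse whitespace."""
    label = re.sub(r"[^\w\s]", " ", label.lower())
    return " ".join(label.split())


def normalize(labels: list[str]) -> Counter:
    """Count how many raw labels fall into each standardized category.

    Labels with no entry in SYNONYMS are counted under "Unmapped" so the
    researcher can review them and extend the table.
    """
    counts: Counter = Counter()
    for raw in labels:
        counts[SYNONYMS.get(clean_label(raw), "Unmapped")] += 1
    return counts


if __name__ == "__main__":
    raw_labels = ["Ticketing!", "ticket  types", "Marketing", "Analytics", "Sales data", "Misc"]
    print(normalize(raw_labels))
```

Keeping unmatched labels in an explicit "Unmapped" bucket, rather than dropping them, mirrors how this kind of cleanup is usually iterative: each pass surfaces the labels that still need a decision.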

Phase 3: Understand Where the Experience Breaks Down

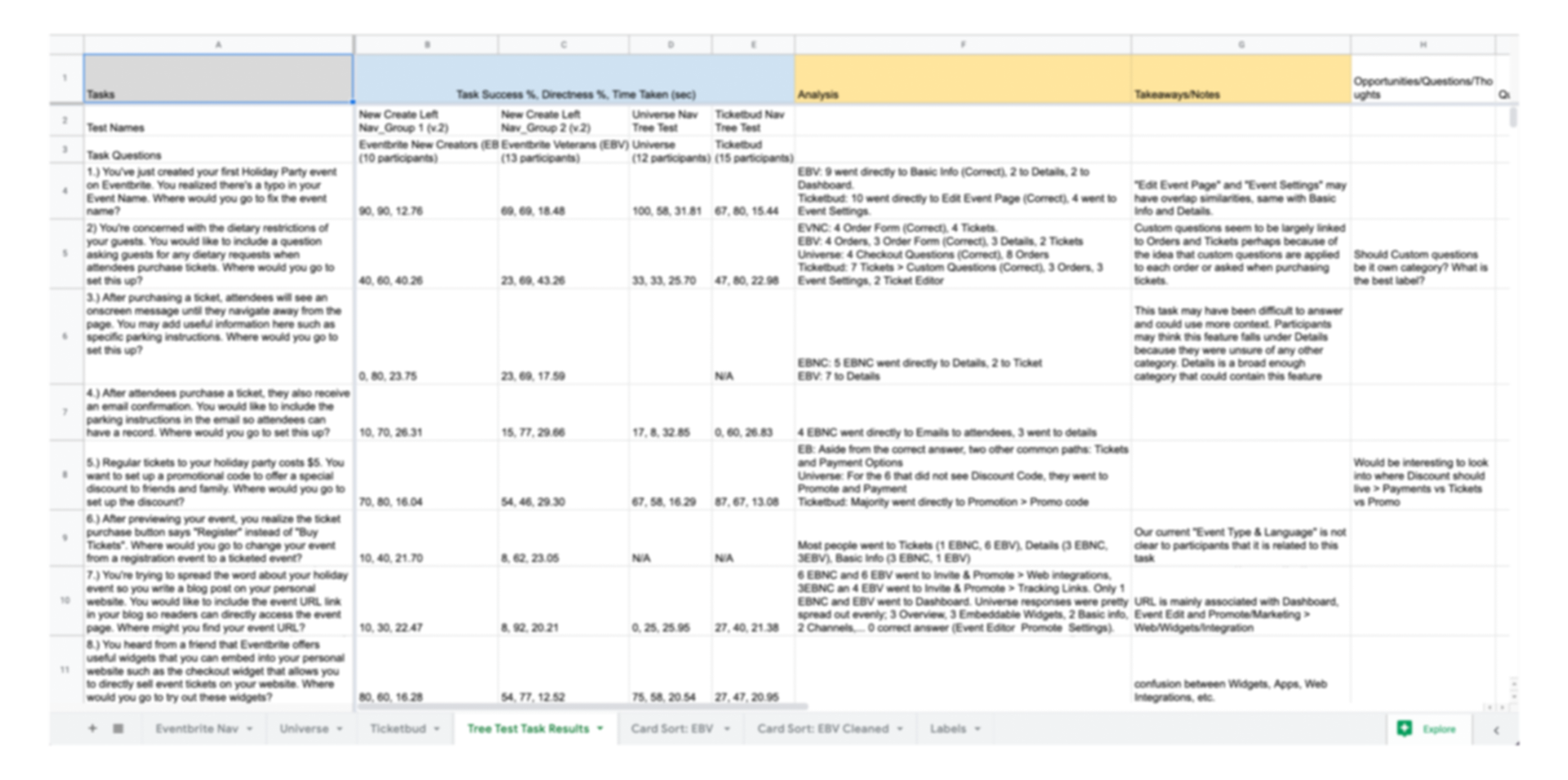

To identify problem areas within the information architecture, I ran a competitive tree test of Eventbrite's IA against those of two other major competitors. The results highlighted specific problem areas in all three IAs. I also compared the performance of Eventbrite's IA between new and returning users and found no significant difference between the two groups. Because experience with Eventbrite did not make the IA easier to navigate, this suggested that our IA was not as learnable as we had thought.
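The study does not say which statistical test was used to compare new and returning users. One common choice for comparing task success rates between two independent groups is a two-proportion z-test, sketched below with the standard library only. It treats every task attempt as an independent trial, which is a simplification when each participant completes several tasks; the function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two independent success rates.

    Returns (z, p_value). The standard error uses the pooled proportion,
    the usual form under the null hypothesis that both rates are equal.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all attempts succeeded or all failed; the test is undefined")
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: successful task attempts / total attempts per group.
    z, p = two_proportion_z_test(62, 200, 66, 200)
    print(f"z = {z:.2f}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

A non-significant result like the one in this usage example means the data give no evidence that returning users outperform new users, which is the pattern that points to a learnability problem.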

By synthesizing the learnings from all three phases of the study, I created an improved version of Eventbrite's IA. I then ran the exact same tree test on the new version, and it showed a 104% increase in task success over the original (from 31% to 64%). The improved version also outperformed both competitors, suggesting that the changes increased feature discoverability. Running competitive tree tests allowed us to benchmark the improved IA against both the original Left Nav and our two competitors.
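The benchmarking above comes down to two numbers per IA version: the share of task attempts that end on a correct node, and the relative change between versions. The sketch below computes both from pass/fail records. The `TaskAttempt` schema, the version labels, and the sample records are hypothetical and do not reproduce the study's data.

```python
from dataclasses import dataclass


@dataclass
class TaskAttempt:
    """One participant's attempt at one tree-test task (hypothetical schema)."""
    ia_version: str  # e.g. "original", "improved", "competitor_a"
    task_id: str
    success: bool    # True if the participant ended on a correct node


def success_rate(attempts: list[TaskAttempt], ia_version: str) -> float:
    """Share of attempts on one IA version that ended on a correct node."""
    relevant = [a for a in attempts if a.ia_version == ia_version]
    if not relevant:
        raise ValueError(f"no attempts recorded for {ia_version!r}")
    return sum(a.success for a in relevant) / len(relevant)


def relative_change(old: float, new: float) -> float:
    """Relative change from old to new; 0.5 -> 0.75 gives 0.5 (a 50% increase)."""
    return (new - old) / old


if __name__ == "__main__":
    attempts = [
        TaskAttempt("original", "t1", True),
        TaskAttempt("original", "t2", False),
        TaskAttempt("original", "t3", False),
        TaskAttempt("original", "t4", True),
        TaskAttempt("improved", "t1", True),
        TaskAttempt("improved", "t2", True),
        TaskAttempt("improved", "t3", False),
        TaskAttempt("improved", "t4", True),
    ]
    before = success_rate(attempts, "original")
    after = success_rate(attempts, "improved")
    print(f"{before:.0%} -> {after:.0%} ({relative_change(before, after):+.0%})")
```

Reporting the absolute rates alongside the relative change keeps the comparison readable: the same result can be described as a gain in percentage points or as a percent increase over the baseline.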

For a more in-depth look at my work, see my presentation below!